The fruit fly Drosophila melanogaster. Picture courtesy of Karolinska Institute, Sweden.

We can now put together our proposals on the nature of intentional agency in organisms that are undergoing operant conditioning. In making these proposals I am heavily indebted to Prescott (2001), Abramson (1994, 2003), Dretske (1999), Wolf and Heisenberg (1991), Heisenberg, Wolf and Brembs (2001), Brembs (1996, 2003), Cotterill (1997, 2001, 2002), Grau (2002), Beisecker (1999) and Carruthers (2004).

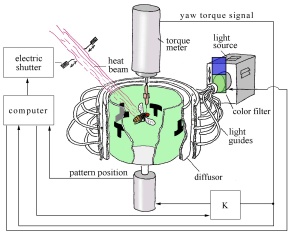

The following discussion is based on experiments described by Wolf and Heisenberg (1991), Heisenberg, Wolf and Brembs (2001), and Brembs (1996, 2003), with the fruit fly Drosophila melanogaster at the flight simulator.

Readers are strongly advised to familiarise themselves with the experimental set-up, which is described in the Appendix, before going any further. In the Appendix, I also discuss evidence for so-called "higher-order" forms of associative learning (blocking, overshadowing, sensory pre-conditioning (SPC) and second-order conditioning (SOC)) in fruit flies, before reaching the following negative conclusion:

L.17 The occurrence of higher-order forms of associative learning in an organism does not, taken by itself, warrant the conclusion that it has cognitive mental states.

In this section, I present what I believe to be a list of sufficient conditions for the occurrence of intentional agency, in the context of operant conditioning. I describe these conditions thematically, under several headings.

Innate preferences

A biologically significant stimulus (the unconditioned stimulus, or US) figures prominently in all forms of associative learning. The animal's attraction or aversion to this stimulus is taken as a given. In other words, associative learning is built upon a foundation of innate preferences.

There is another more fundamental reason why innate preferences are important: they reflect the organism's underlying biological needs. In part A, I argued (conclusion B.3) that we can only meaningfully ascribe mental states to animals that display selfish behaviour, which is directed at satisfying their own built-in biological needs.

The experimental set-up depicted above for monitoring the operant behaviour of the fruit-fly (Drosophila melanogaster) is constructed on the assumption that fruit-flies have an innate aversion to heat, and will therefore try to avoid an infra-red heat-beam. The flies in the experiment face a formidable challenge: they have to "figure out" what to do in order to shut off a heat beam which can fry them in 30 seconds.

Innate motor programs, exploratory behaviour and action selection

In the experiment, the tethered fruit fly is placed in a cylindrical arena which is capable of rotating in such a way as to simulate flight, even though the fly is stationary. The fly has four basic motor patterns that it can activate - in other words, four degrees of freedom. It can adjust its yaw torque (tendency to perform left or right turns), lift/thrust, abdominal position or leg posture (Heisenberg, Wolf and Brembs, 2001, p. 2).

The fly selects an appropriate motor pattern by a trial-and-error process of exploratory behaviour. Eventually, it manages to stabilise the rotating arena and prevent itself from being fried by the heat beam:

As the fly initially has no clue as to which behavior the experimenter chooses for control of the arena movements, the animal has no choice but to activate its repertoire of motor outputs and to compare this sequence of activations to the dynamics of arena rotation until it finds a correlation (Heisenberg, Wolf and Brembs, 2001, p. 2).

Prescott (2001, p. 1) defines action selection as the problem of "resolving conflicts between competing behavioural alternatives". The behavioural alternative (or motor pattern) selected by the fly is the one which enables it to avoid the heat. In the experiments described above, the fly engages in action selection when undergoing operant conditioning, and also when it is in "flight-simulator mode" and "switch-mode" (see the Appendix for a definition of these terms).

However, it has been argued above (Conclusions A.11, A.12 and A.13) that "action selection" - even if centralised - does not, by itself, warrant a mentalistic interpretation, as it can be generated by fixed patterns of behaviour. For instance, the meandering behaviour of flatworms can be explained in terms of four general motor patterns; but because these patterns of behaviour appeared to be fixed, I argued that they should not be regarded as manifestations of intentional agency (conclusion A.13).

Even when an animal's patterns of behaviour are flexible and internally generated, as is the case with instrumental conditioning, a mind-neutral goal-centred intentional stance appears adequate to characterise the behaviour. In part B, I concluded that instrumental conditioning, per se, does not warrant the ascription of mental states to an animal (conclusion L.15).

Fine-tuning

I then argued that an organism had to be capable of fine-tuning its bodily movements before it could be identified as having cognitive mental states (conclusion A.14).

Fine-tuning was described as a refinement of action selection. More specifically, I defined it as an individual's act of stabilising a basic motor pattern at a particular value or confining it within a narrow range of values, in order to achieve a goal that the individual had learned to associate with the action.

As we have seen, a tethered fly has four basic motor programs that it can activate. Each motor program can be implemented at different strengths or values. A fly's current yaw torque is always at a particular angle; its thrust is always at a certain intensity, and so on. In other words, each of the fly's four motor patterns can be fine-tuned.

In the fruit-fly experiments described above, flies were subjected to four kinds of conditioning, the simplest of which is referred to by Brembs (2000) as pure operant conditioning and by Heisenberg, Wolf and Brembs (2001) as yaw-torque learning. But if we follow the naming convention I proposed in part B, the flies' behaviour might be better described as instrumental conditioning, as the essential ingredient of fine-tuning appears to be absent. As Heisenberg (personal email, 6 October 2003) points out, all that the flies had to learn in this case was: "Don't turn right." The range of permitted behaviour (flying anywhere in the left domain) is too broad for us to describe this as fine-tuning. Only if we could show that Drosophila was able to fine-tune one of its motor patterns (e.g. its thrust) while undergoing yaw torque learning could we then justifiably conclude that it was a case of true operant conditioning.

Abramson has expressed scepticism regarding the occurrence of fine-tuning in invertebrates:

I would be more convinced that an invertebrate has operant responses if they can adjust their, for example, swimming speed to fit the contingencies. These studies have not been performed (personal email, 2 February 2003, italics mine).

I would suggest that the behaviour of the above-mentioned fruit-flies in flight simulator (fs) mode answers Abramson's challenge. In this mode of conditioning, the flies had to stabilise a rotating arena by modulating their yaw torque (tendency to turn left or right), and they also had to stay within a safe zone to avoid the heat. In other experiments (Brembs, 2003), flies were able to adjust their thrust to an arbitrary level that stopped their arena from rotating. The ability of the flies to confine their yaw torque or their thrust to a specified range, in order to avoid heat, surely meets our requirements for fine-tuning. We can conclude that Drosophila is capable of true operant behaviour.

(Grau (2002, p. 85), following Skinner, proposed a distinction between instrumental conditioning and operant learning, where "the brain can select from a range of response alternatives that are tuned to particular environmental situations and temporal-spatial relations" (italics mine). The terminology used here accords with the notions of action selection and fine-tuning, although the language is a little imprecise. It would be better, I suggest, to say that the brain first makes a selection from a range of "response alternatives" (motor patterns) and then fine-tunes it to a "particular environmental situation".)

Other requirements for conditioning: a current goal, sensory inputs and associations

Both instrumental and operant conditioning are based on the assumption that the animal, in addition to having innate goals, also has a current goal: for instance, the attainment of a "reward" or the avoidance of a "punishment". In this case, the fly's current goal is to avoid being incinerated by the heat beam.

Sensory input can also play a key role in operant conditioning: it informs the animal whether it has attained its goal, and if not, whether it is getting closer to achieving it. A fly undergoing operant conditioning in sw-mode or fs-mode needs to continually monitor its sensory input (the background pattern on the cylindrical arena), so as to minimise its deviation from its goal (Wolf and Heisenberg, 1991; Brembs, 1996, p. 3).

By contrast, a fly undergoing yaw torque learning has no sensory inputs that tell it if it is getting closer to its goal: it is flying blind, as it were. The only sensory input it has is a "punishment" (the heat beam is turned on) if it turns right.

Finally, an animal undergoing conditioning needs to be able to form associations. In this case, the fly needs to be able to either associate motor commands directly with their consequences (yaw torque learning) or associate them indirectly, by forming direct associations between motor commands and sensory inputs (changing patterns on the fly's background arena), and between these sensory inputs and the consequences of motor commands.

Internal representations and minimal maps

My conclusion (conclusion A.14) that an animal must be capable of fine-tuning its bodily movements before it can be identified as having cognitive mental states tightened the conditions for agency, but invited another question: even if an animal can learn how to fine-tune its motor patterns in order to obtain a goal, why should its behaviour warrant an agent-centred description rather than a mind-neutral goal-centred one?

I attempted to answer this question by searching for an extra ingredient that would give us a list of sufficient conditions for intentional agency. An investigation of the internal representations which mediate fine-tuning behaviour seemed to be the most promising avenue for inquiry. I examined Dretske's (1999, p. 10) proposal, that in operant conditioning, an organism's internal representations are harnessed to control circuits "so that behaviour will occur in the external conditions on which its success depends". Although Dretske espoused a mentalistic account of operant conditioning, his notion of representation - even when combined with the notion of fine-tuning - did not seem rich enough to justify a mentalistic interpretation of operant conditioning. A more robust concept of representation was clearly needed.

What I am proposing here is that the representational notion of a minimal map (which I define below) is the missing ingredient we have been looking for. A minimal map is what warrants a mentalistic account of operant conditioning.

The map metaphor for belief is by no means new. Its clearest articulation can be found in Ramsey (1990):

A belief of the primary sort is a map of neighbouring space by which we steer. It remains such a map however much we complicate it or fill in details (1990, p. 146).

Before I explain what I mean by a minimal map, I would like to make it clear that I am not claiming that all or even most beliefs are map-like representations. What I am proposing here is that Ramsey's account provides us with a useful way of understanding the beliefs which underlie operant behaviour in animals, as well as three other kinds of agency (spatial learning of visual landmarks, tool making and social learning, which I discuss below).

What do I mean by a minimal map? Stripped to its bare bones, a map must do three things: it must tell you where you are now, where you want to go, and how to get to where you want to go. The word "where" need not be taken literally as referring to a place, but it has to refer to a specific state - for instance, a specific color, temperature, size, angle, speed or intensity - or at least to a relatively narrow range of values.

A minimal map need not be spatial, but it must represent specific states.

Definition - "minimal map"

A minimal map is a representation which shows:

(i) an individual's current state,

(ii) the individual's goal and

(iii) a suitable means for getting to the goal.
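To make the three components concrete, here is a minimal Python sketch of a minimal map over a single continuous variable, such as a fly's yaw torque. The class name `MinimalMap`, the numeric goal range and the +1/-1/0 encoding of the means are illustrative assumptions, not a claim about how Drosophila's nervous system encodes anything. A bare association (say, "turning right leads to heat") would have no counterpart of the `current` field.

```python
from dataclasses import dataclass


@dataclass
class MinimalMap:
    """Hypothetical minimal map over one continuous motor variable (e.g. yaw torque)."""

    current: float    # (i) the individual's current state
    goal_low: float   # (ii) the goal, expressed as a narrow range of values...
    goal_high: float  #      ...from goal_low to goal_high

    def at_goal(self) -> bool:
        """True if the current state already lies within the goal range."""
        return self.goal_low <= self.current <= self.goal_high

    def means(self) -> int:
        """(iii) A means of reaching the goal: +1 = increase, -1 = decrease, 0 = hold."""
        if self.current < self.goal_low:
            return 1
        if self.current > self.goal_high:
            return -1
        return 0
```

For example, `MinimalMap(current=-3.0, goal_low=0.5, goal_high=1.5).means()` returns `1`: the current value lies below the goal range, so the means is to increase it.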

A minimal map can also be described as an action schema. The term "action schema" is used rather loosely in the literature, but Perner (2003, p. 223, italics mine) offers a good working definition: "[action] schemata (motor representations) not only represent the external conditions but the goal of the action and the bodily movements to achieve that goal."

A minimal map is more than a mere association. An association, by itself, does not qualify as a minimal map, because it does not include information about the individual's current state.

On the other hand, a minimal map is not as sophisticated as a cognitive map, and I am certainly not proposing that Drosophila has a "cognitive map" representing its surroundings. In a cognitive map, "the geometrical relationships between defined points in space are preserved" (Giurfa and Capaldi, 1999, p. 237). As we shall see, the existence of such maps even in honeybees remains controversial. What I am suggesting is something more modest.

First, Drosophila can form internal representations of its own bodily movements, for each of its four degrees of freedom, within its brain and nervous system.

Second, it can either (a) directly associate these bodily movements with good or bad consequences, or (b) associate its bodily movements with sensory stimuli (used for steering), which are in turn associated with good or bad consequences (making the association of movements with consequences indirect). In case (a), Drosophila uses an internal motor map; in case (b), it uses an internal sensorimotor map. In neither case need we suppose that it has a spatial grid map.

A minimal map, or action schema, is what allows the fly to fine-tune the motor program it has selected. In other words, the existence of a minimal map (i.e. a map-like representation of an animal's current state, goal and pathway to its goal) is what differentiates operant from merely instrumental conditioning.

For an internal motor map, the current state is simply the present value of the motor plan the fly has selected (e.g. the fly's present yaw torque), the goal is the value of the motor plan that enables it to escape the heat (e.g. the safe range of yaw torque values), while the means for getting there is the appropriate movement for bringing the current state closer to the goal.

For an internal sensorimotor map, the current state is the present value of its motor plan, coupled with the present value of the sensory stimulus (color or pattern) that the fly is using to navigate; the goal is the color or pattern that is associated with "no-heat" (e.g. an inverted T); and the means for getting there is the manner in which it has to fly to keep the "no-heat" color or pattern in front of it.
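The sensorimotor case can be pictured as a closed loop in which the fly's yaw torque shifts the apparent position of the "no-heat" pattern. In this hypothetical Python sketch, angles are in degrees, azimuth 0 means the pattern is straight ahead, the fly's torque is simply proportional to the pattern's offset, and a 15-degree window counts as "in front"; none of these values comes from the experiments, and the function names are illustrative. The returned list of azimuths plays the role of the pathway: the sequence of sensory stimuli by which the fly steers.

```python
def wrap(angle: float) -> float:
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def steer_to_safe_pattern(azimuth: float, goal_width: float = 15.0,
                          gain: float = 0.5, max_steps: int = 100) -> list[float]:
    """Fine-tune yaw torque until the 'no-heat' pattern is within goal_width degrees
    of straight ahead. Returns the sequence of azimuths seen on the way (the pathway)."""
    path = [wrap(azimuth)]
    for _ in range(max_steps):
        seen = path[-1]                   # current state (sensory side): where the pattern appears
        if abs(seen) <= goal_width:       # goal: the pattern is roughly in front
            break
        torque = gain * seen              # current state (motor side) and means: turn toward it
        # Closed loop (fs-mode): the fly's torque rotates the arena, shifting the pattern.
        path.append(wrap(seen - torque))
    return path
```

Starting with the pattern at 120 degrees, the loop visits 120, 60, 30 and 15 degrees before stopping.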

I maintain that Drosophila employs an internal sensorimotor map when it is undergoing fs-mode learning. I suggest that Drosophila might use an internal motor map when it is undergoing pure operant conditioning (yaw torque learning). (I am more tentative about the second proposal, because as we have seen, in the case of yaw torque learning, Drosophila may not be engaging in fine-tuning at all, and hence may not need a map to steer by.) Drosophila may make use of both kinds of maps while flying in sw-mode, as it undergoes parallel operant conditioning.

An internal motor map, if it existed, would be the simplest kind of minimal map, but if (as I suggest) what Brembs (2000) calls "pure operant conditioning" (yaw torque learning) turns out to be instrumental learning, then we can explain it without positing a map at all: the fly may be simply forming an association between a kind of movement (turning right) and heat (Heisenberg, personal email, 6 October 2003).

Wolf and Heisenberg's model of agency compared and contrasted with mine

My description of Drosophila's internal map draws heavily upon the conceptual framework for operant conditioning developed by Wolf and Heisenberg (1991, pp. 699-705). Their model includes:

(i) a goal;

(ii) a range of motor programs which are activated in order to achieve the goal;

(iii) a comparison between the animal's motor output and its sensory stimuli, which indicate how far it is from its goal;

(iv) when a temporal coincidence is found, a motor program is selected to modify the sensory input so the animal can move towards its goal.

These four conditions describe operant behaviour. Finally,

(v) if the animal consistently controls a sensory stimulus by selecting the same motor program, a more permanent change in its behaviour may result: operant conditioning.

The main differences between this model and my own proposal are that:

(i) I envisage a two-stage process, whereby Drosophila first selects a motor program (action selection), and subsequently refines it through a fine-tuning process, thereby exercising control over its bodily movements;

(ii) I hypothesise that Drosophila uses a minimal map of some sort (probably an internal sensorimotor map) to accomplish this; and

(iii) because Drosophila does not use this map when undergoing instrumental conditioning, I predict a clear-cut distinction (which should be detectable at the neurological level) between operant conditioning and merely instrumental conditioning.

In the fine-tuning process I describe, there is a continual interplay between Drosophila's "feed-back" and "feed-forward" mechanisms. Drosophila at the torque meter can adjust its yaw torque, lift/thrust, abdominal position or leg posture. I propose that the fly has an internal motor map or sensorimotor map corresponding to each of its four degrees of freedom, and that once it has selected a motor program, it can use the relevant map to steer itself away from the heat.

How might the fly form an internal representation of the present value of its motor plan? Wolf and Heisenberg (1991, p. 699) suggest that the fly maintains an "efference copy" of its motor program, in which "the nervous system informs itself about its own activity and about its own motor production" (Legrand, 2001). The concept of an efference copy was first mooted in 1950, when it was suggested that "motor commands must leave an image of themselves (efference copy) somewhere in the central nervous system" (Merfeld, 2001, p. 189).

Merfeld (2001, p. 189) summarises the history of the notion of an efference copy:

Sperry (1950) and von Holst and Mittelstaedt (1950) independently suggested that motor commands must leave an image of themselves (efference copy) somewhere in the central nervous system that is then compared to the afference elicited by the movement (reafference). It was soon recognised that the efference copy and reafference could not simply be compared, since one is a motor command and the other is a sensory cue (Hein & Held 1961; Held 1961).

To solve this problem, various models have been proposed. Merfeld (2001) has developed his own model of how the nervous system forms an internal representation of the dynamics of its sensory and motor systems:

The primary input to this model is desired orientation, which when compared to the estimated orientation yields motor efference via a control strategy. These motor commands are filtered by the body dynamics (e.g., muscle dynamics, limb inertia, etc.) to yield the true orientation, which is measured by the sensory systems with their associated sensory dynamics to yield sensory signals. In parallel with the real-world body dynamics and sensory dynamics, a second neural pathway exists that includes an internal representation of the body dynamics and an internal representation of the sensory dynamics. Copies of the efferent commands (efference copy) are processed by these internal representations to yield the expected sensory signals, which when compared to the sensory signals yield an error (mismatch). This error is fed back to the internal representation of body dynamics to help minimize the difference between the estimated orientation and true orientation (2001, p. 189).
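Merfeld's description can be read as a simple state estimator. The scalar Python sketch below is one hedged interpretation, not Merfeld's own implementation: the gains, the deliberately imperfect internal model of the body (`model_gain` differs from `body_gain`) and the ideal sensory dynamics are all assumptions made for illustration. The mismatch between actual and expected sensory signals is fed back to correct the estimated orientation, so the estimate converges on the true orientation even though the internal model is wrong.

```python
def simulate_merfeld_loop(desired: float, steps: int = 50, k_control: float = 0.5,
                          body_gain: float = 1.0, model_gain: float = 0.8,
                          feedback_gain: float = 0.5) -> tuple[float, float]:
    """Scalar internal-model loop in the spirit of Merfeld (2001), with hypothetical gains.

    body_gain  -- the real body dynamics (how far one unit of command moves the body)
    model_gain -- the internal representation of the body dynamics (deliberately imperfect)
    Sensory dynamics are assumed ideal: the sensors report orientation exactly.
    Returns (true orientation, estimated orientation) after the final step.
    """
    true_orientation = 0.0
    estimated = 0.0
    for _ in range(steps):
        command = k_control * (desired - estimated)      # control strategy yields motor efference
        true_orientation += body_gain * command          # real body dynamics
        sensory = true_orientation                       # real (ideal) sensory dynamics
        expected = estimated + model_gain * command      # efference copy through the internal model
        mismatch = sensory - expected                    # error between sensed and expected signals
        estimated = expected + feedback_gain * mismatch  # error fed back to correct the estimate
    return true_orientation, estimated
```

With these hypothetical settings, `simulate_merfeld_loop(30.0)` drives both the true and the estimated orientation to within a tiny fraction of 30 after 50 steps.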

Merfeld's model resembles one developed by Gray (1995):

Analogous to Gray's description of his model, this model (1) takes in sensory information; (2) interprets this information based on motor actions; (3) makes use of learned correlations between sensory stimuli; (4) makes use of learned correlations between motor actions and sensory stimuli; (5) from these sources predicts the expected state of the world; and (6) compares the predicted sensory signals with the actual sensory signals (Merfeld, 2001, p. 190).

The distinctive feature of Merfeld's model is that in the event of a mismatch between the expected and actual sensory signals, the mismatch is used as an error signal to guide the estimated state back toward the actual state. In Gray's model, all action halts during sensorimotor mismatch.

Heisenberg summarises the current state of research into internal representations in the brain of Drosophila:

The motor program for turning in flight, by comparison to other insects, is most likely in the mesothoracic neuromeres of the ventral ganglion in the thorax. We hypothesize that the motor program for turning in flight has a representation in the brain. However, we know nearly nothing about it. We have a mutant, no-bridge-KS49, which by all available criteria (e.g. mosaic studies) is impaired in the central brain and not in the thorax. This mutant in the yaw torque learning experiment can avoid the heat but has no aftereffect. In other words, the motor program snaps back to the whole yaw torque range as soon as the heat is turned off. We take this as a first hint to argue that the restriction of the yaw torque range in the heat training occurs in the brain at an organizational layer above the motor program itself (personal email, 15 October 2003).

Recently, Barbara Webb (2004, Neural mechanisms for prediction: do insects have forward models? Trends in Neurosciences 27:278-282) has reviewed proposals that invertebrates such as insects make use of "forward models", as vertebrates do:

The essential idea [of forward models] is that an important function implemented by nervous systems is prediction of the sensory consequences of action... [M]any of the purposes forward models are thought to serve have analogues in insect behaviour; and the concept is closely connected to those of 'efference copy' and 'corollary discharge' (2004, p. 278).

Webb discusses a proposal that insects may make use of some sort of "look-up table" in which motor commands are paired up with their predicted sensory consequences. The table would not need to be a complete one in order to be adequate for the insect's purposes. The contents of this table (predicted sensory consequences of actions) would be acquired by learning on the insect's part. Clearly, more research needs to be done in this area.

Actions and their consequences: how associations can represent goals and pathways on the motor map

The foregoing discussion focused on how the fly represents its current state on its internal map, but it left unanswered the question of how the fly represents its goal and pathway (or means to reaching its goal).

If the fly is using an internal motor map, the goal (escape from the heat) could be represented as a stored motor memory of the motor pattern (flying clockwise) which allows the fly to stay out of the heat, and the pathway as a stored motor memory of the movement (flying into the left domain) which allows the fly to get out of the heat. The stored memory here would arise from the fly's previous exploratory activities when it tries out various movements to see what will enable it to escape the heat. (I must stress that the existence of such motor memories in Drosophila is purely speculative; they are simply posited here to explain how an internal motor map could work, if it exists.)

Alternatively, if the fly is using an internal sensorimotor map, the goal is encoded as a stored memory of a sensory stimulus (e.g. the inverted T) that the fly associates with the absence of heat, while the pathway is the stored memory of a sequence of sensory stimuli which allows the animal to steer itself towards its goal.

These representations presuppose that the fly can form associations between things as diverse as motor patterns, shapes and heat. Heisenberg, Wolf and Brembs (2001, p. 6) suggest that Drosophila possesses a multimodal memory, in which "colors and patterns are stored and combined with the good and bad of temperature values, noxious odors, or exafferent motion". In other words, the internal representation of the fly's motor commands has to be coupled with the ability to form and remember associations between different possible actions (yaw torque movements) and their consequences. Heisenberg explains why these associations matter:

A representation of a motor program in the brain makes little sense if it does not contain, in some sense, the possible consequences of this motor program (personal email, 15 October 2003).

On the hypothesis which I am defending here, the internal motor map (used in sw-mode and possibly in yaw torque learning) directly associates different yaw torque values with heat and comfort. The internal sensorimotor map, which the fly uses in fs-mode and sw-mode, indirectly associates different yaw torque values with good and bad consequences. For instance, different yaw torque values may be associated with the upright T-pattern (the conditioned stimulus) and inverted T-pattern, which are associated with heat (the unconditioned stimulus) and comfort respectively.

Exactly where the fly's internal map is located remains an open question: Heisenberg, Wolf and Brembs (2001, p. 9) acknowledge that we know very little about how flexible behaviour in Drosophila is implemented at the neural level. Recently, Heisenberg (personal email, 15 October 2003) has proposed that the motor program for turning in flight is stored in the ventral ganglion of the thorax, while the multimodal memory (which allows the fly to steer itself and form associations between actions and consequences) is stored in the insect's brain, at a higher level. The map itself may therefore be distributed across the fly's nervous system.

Correlation mechanism

The animal must also possess a correlation mechanism, allowing it to find a temporal coincidence between its motor behaviour and the attainment of its goal (avoiding the heat). Once it finds a temporal correlation between its behaviour and its proximity to the goal, "the respective motor program is used to modify the sensory input in the direction toward the goal" (Wolf and Heisenberg, 1991, p. 699; Brembs, 1996, p. 3). In the case of the fly undergoing flight simulator training, finding a correlation would allow it to change its field of vision, so that it can keep the inverted T in its line of sight.

Self-correction

In part B, a capacity for self-correction was highlighted as an essential condition for holding a belief. Beisecker (1999) argued that animals that are capable of operant conditioning would revise their mistaken expectations and try to correct their mistakes. However, we were not able to identify criteria that would enable us to clearly identify self-correcting behaviour in animals.

One of the conditions that we identified for self-correcting behaviour was that the animal had to be able to rectify motor patterns which deviate outside the desired range. Legrand (2001) has proposed that the "efference copy" of an animal's motor program not only gives it a sense of trying to do something, but also indicates the necessity of a correction.

However, this cannot be the whole story. As Beisecker (1999) points out, self-correction involves modifying one's beliefs as well as one's actions, so that one can avoid making the same mistake in future. This means that animals with a capacity for self-correction have to be capable of updating their internal representations. One way the animal could do this is to continually update its multimodal associative memory as new information comes to light and as circumstances change. For example, in the fly's case, it needs to update its memory if the inverted T design on its background arena comes to be associated with heat rather than the absence of it.
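Updating a stored association when the contingency reverses can be illustrated with a delta rule of the Rescorla-Wagner kind. This is a swapped-in textbook learning rule used purely for illustration; the source does not say how Drosophila updates its multimodal memory, and the pattern labels, initial strengths and learning rate below are hypothetical.

```python
def update_association(strength: float, heat_present: bool,
                       learning_rate: float = 0.3) -> float:
    """Delta-rule update (Rescorla-Wagner style, a stand-in only): move the stored
    heat expectation for a pattern toward what actually happened on this trial."""
    outcome = 1.0 if heat_present else 0.0
    return strength + learning_rate * (outcome - strength)


# Hypothetical memory after initial training: the upright T predicts heat.
memory = {"upright T": 1.0, "inverted T": 0.0}

# The contingency is reversed: the inverted T is now paired with heat.
for _ in range(10):
    memory["inverted T"] = update_association(memory["inverted T"], heat_present=True)
    memory["upright T"] = update_association(memory["upright T"], heat_present=False)

# The pattern to keep in front is the one now least associated with heat.
safe_pattern = min(memory, key=memory.get)
```

After ten reversed trials the inverted T carries a heat expectation of about 0.97 and the upright T about 0.03, so `safe_pattern` is now `"upright T"`.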

Finally, self-correction can be regarded as a kind of rule-following activity. Heisenberg, Wolf and Brembs (2001, p. 3) contend that operant behaviour can be explained by the following rule: "Continue a behaviour that reduces, and abandon a behaviour that increases, the deviation from the desired state." The abandonment referred to here could be called self-correction, if it takes place within the context of operant conditioning.
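The quoted rule can be written as a tiny control loop over one motor variable. In this hypothetical Python sketch the variable moves in fixed steps; whenever a step fails to reduce the deviation from the desired value, that direction is abandoned and reversed, and each reversal is counted as a self-correction. The step size, stopping tolerance and function name are assumptions.

```python
def apply_operant_rule(start: float, desired: float, step: float = 1.0,
                       max_steps: int = 100) -> tuple[float, int]:
    """Continue a change that reduces the deviation from the desired state;
    abandon (reverse) a change that increases it. Returns (final value, corrections)."""
    value, direction = start, 1.0
    deviation = abs(value - desired)
    corrections = 0
    for _ in range(max_steps):
        if deviation <= step / 2:          # close enough to the desired state
            break
        value += direction * step          # perform the behaviour
        new_deviation = abs(value - desired)
        if new_deviation >= deviation:     # deviation did not shrink: abandon this behaviour
            direction = -direction         # self-correction: reverse course
            corrections += 1
        deviation = new_deviation
    return value, corrections
```

Starting at 5 with a desired value of 2, the first step overshoots to 6, the deviation grows, the direction is reversed once, and the loop settles at 2 with one correction.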

Control: what it is and what it is not

I wish to make it clear that I am not proposing that Drosophila consciously attends to its internal motor (or sensorimotor) map when it adjusts its angle of flight at the torque meter. To do that, it would have to not only possess awareness, but also be aware of its own internal states. In any case, the map is not something separate from the fly, which it can consult: rather, it is instantiated within the fly's body. We should picture the fly observing its environment, rather than the map itself. Nevertheless, the map is real, and not just a construct. The associations between motor patterns, sensory inputs and consequences (heat or no heat) are formed in the fly's brain, and the fly uses them to steer its way out of trouble. Additionally, the fly's efference copy enables it to monitor its own bodily movements, and it receives sensory feedback (via the visual display and the heat beam) when it varies its bodily movements.

On the other hand, we should not picture the fly as an automaton, passively following some internal program which tells it how to navigate. There would be no room for agency or control in such a picture. Rather, controlled behaviour originates from within the animal, instead of being triggered from without (as reflexes are, for instance). It is efferent, not afferent: the animal's nervous system sends out impulses to a bodily organ (Legrand, 2001). The animal also takes the initiative when it subsequently compares the output from the nervous system with its sensory input, until it finds a positive correlation (Wolf and Heisenberg, 1991, p. 699; Brembs, 1996, p. 3). Wolf and Heisenberg (1991, quoted in Brembs, 1996, p. 3, italics mine) define operant behaviour as "the active choice of one out of several output channels in order to minimize the deviations of the current situation from a desired situation", and operant conditioning as a more permanent behavioural change arising from "consistent control of a sensory stimulus."

Acts of control should be regarded as primitive acts: they are not performed by doing something else that is even more fundamental. However, the term "by" also has an adverbial meaning: it can describe the manner in which an act is performed. If we say that a fly controls its movements by following its internal map, this does not mean that map-following is a lower level act, but that the fly uses a map when exercising control. The same goes for the statement that the fly controls its movements by paying attention to the heat, and/or the color or patterns on the rotating arena.

Why an agent-centred intentional stance is required to explain operant conditioning

We can now explain why an agent-centred mentalistic account of operant conditioning is to be preferred to a goal-centred intentional stance. The former focuses on what the agent is trying to do, while the latter focuses on the goals of the action being described. A goal-centred stance has two components: an animal's goal and the information it has which helps it attain its goal. The animal is not an agent here: its goal-seeking behaviour is triggered by the information it receives from its environment.

We saw in part B that a goal-centred stance could adequately account for instrumental conditioning, which requires nothing more than information (about the animal's present state and end state) and a goal (or end state). Here, the animal does not need to fine-tune its motor patterns.

By contrast, our account of operant conditioning includes not only information (about the animal's present state and end state) and a goal (or end state), but also an internal representation of the means or pathway by which the animal can steer itself from where it is now towards its goal - that is, the sequence of movements and/or sensory stimuli that guides it to its goal. In operant conditioning, I hypothesise that the animal uses its memory of this "pathway" to continually fine-tune its motor patterns and correct any "overshooting". In this context, it makes sense to say that the animal believes that by following this pathway (sequence of bodily movements) it will attain what it wants (its goal).

Additionally, the animal's ability to update the "means-end" associations in its memory as circumstances change makes it natural to speak of the animal as revising its beliefs about how to get what it wants. (In the fly's case, when heat comes to be associated with an inverted T on its background arena, instead of an upright T, it adjusts not only its present but also its future behaviour.)
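The revision described above can be illustrated with a toy simulation. This is a minimal sketch, not the author's model or the published experimental protocol. It assumes that the fly's means-end association is a single number per arena pattern (its expected heat), updated by a simple delta rule. The pattern labels, learning rate and trial counts are hypothetical.

```python
"""Toy illustration of belief revision after a heat reversal.

Assumptions (hypothetical, for illustration only): each arena pattern carries
one stored heat expectation, updated by a simple delta rule, and the fly
steers towards whichever pattern it currently expects to be cooler.
"""

LEARNING_RATE = 0.3  # hypothetical value


def update(expectations, pattern, heat, rate=LEARNING_RATE):
    """Move the stored heat expectation for `pattern` towards the observed heat."""
    expectations[pattern] += rate * (heat - expectations[pattern])


def preferred_pattern(expectations):
    """Return the pattern currently expected to be the cooler one."""
    return min(expectations, key=expectations.get)


def run_phase(expectations, hot_pattern, trials=10):
    """Expose the fly to both patterns for several trials, then report its preference."""
    for _ in range(trials):
        for pattern in expectations:
            heat = 1.0 if pattern == hot_pattern else 0.0
            update(expectations, pattern, heat)
    return preferred_pattern(expectations)


beliefs = {"upright_T": 0.0, "inverted_T": 0.0}
print(run_phase(beliefs, hot_pattern="upright_T"))   # inverted_T
print(run_phase(beliefs, hot_pattern="inverted_T"))  # upright_T
```

The same stored expectations that guided the fly in the first phase are overwritten in the second, so its future preference changes along with its present behaviour.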

"But why should a fine-tuned movement be called an action, and not a reaction?" - The reason is that fine-tuned movement is self-generated: it originates from within the animal's nervous system, instead of being triggered from without. Additionally, the animal takes the initiative when it looks for a correlation between its fine motor outputs with its sensory inputs, until it manages to attain the desired state. Talk of action is appropriate here because of the two-way interplay between the agent adjusting its motor output and the new sensory information it receives from its environment.

The centrality of control in our account allows us to counter Gould's objection (2002, p. 41) that associative learning is too innate a process to qualify as cognition. In the case of instrumental conditioning, an organism forms an association between its own motor behaviour and a stimulus in its environment. Here the organism's learning content is indeed determined by its environment, while the manner in which it learns is determined innately by its neural hard-wiring. By contrast, in operant conditioning, both the content and manner of learning are determined by a complex interplay between the organism and its environment, as the organism monitors and continually controls the strength of its motor patterns in response to changes in its environment. One could even argue that an organism's ability to construct its own internal representations to control its movements enables it to "step outside the bounds of the innate", as a truly cognitive organism should be able to do (Gould, 2002, p. 41).

"But why should we call the fly's internal representation a belief?" - There are three powerful reasons for doing so. First, the fly's internal representation tracks the truth in a robust sense: it not only mirrors a state of affairs in the real world, but changes whenever the situation it represents varies. The fly's internal representation changes if it has to suddenly learn a new pathway to attain its goal.

Second, the way the internal representation functions in explaining the fly's behaviour is similar in important respects to the behavioural role played by human beliefs. If Ramsey's account of belief is correct, then the primary function of our beliefs is to serve as maps whereby we steer ourselves. The fly's internal representations fulfil this primary function admirably well, enabling it to benefit thereby. We have two options: either ascribe beliefs to flies, or ditch the Ramseyan account of belief in favour of a new one, and explain why it is superior to Ramsey's model.

Third, I contend that any means-end representation by an animal which is formed by a process under the control of the animal deserves to be called a belief. The process whereby an animal controls its behaviour was discussed above.

Davidson (1975) argued against the possibility of imputing beliefs and desires to animals, on the grounds that (i) beliefs and desires have a content which can be expressed in a sentence (e.g. to believe is to believe that S, where S is a sentence); (ii) it is always absurd to attribute belief or desire that S to an animal, because nothing in its behaviour could ever warrant our ascription to it of beliefs and desires whose content we can specify in a sentence containing our concepts. The animal is simply incapable of the relevant linguistic behaviour. I have already addressed objections relating to the propositional content of flies' beliefs, in part A, so I will not repeat them here.

Perhaps the main reason for the residual reluctance by some philosophers to ascribe beliefs to insects is the commonly held notion that beliefs have to be conscious. I shall defer my discussion of consciousness until chapter four. For the time being, I shall confine myself to two comments: (i) to stipulate that beliefs have to be conscious obscures the concept of "belief", as the notion of "consciousness" is far less tractable than that of "belief"; (ii) even granting that human beliefs are typically conscious, to at least some extent, it does not follow that beliefs in all other species of animals have to be conscious too.

A model of operant agency

We can now formulate a set of sufficient conditions for operant conditioning and what I call operant agency.

Definition - "operant conditioning"

An animal can be described as undergoing operant conditioning if the following features can be identified:

(i) innate preferences or drives;

(ii) innate motor programs, which are stored in the brain and generate the suite of the animal's motor output;

(iii) a tendency on the animal's part to engage in exploratory behaviour;

(iv) an action selection mechanism, which allows the animal to make a selection from its suite of possible motor response patterns and pick the one that is the most appropriate to its current circumstances;

(v) fine-tuning behaviour: efferent motor commands which are capable of stabilising a motor pattern at a particular value or within a narrow range of values, in order to achieve a goal;

(vi) a current goal: the attainment of a "reward" or the avoidance of a "punishment";

(vii) sensory inputs that inform the animal whether it has attained its goal, and if not, whether it is getting closer to achieving it;

(viii) direct or indirect associations between (a) different motor commands; (b) sensory inputs (if applicable); and (c) consequences of motor commands, which are stored in the animal's memory and updated when circumstances change;

(ix) an internal representation (minimal map) which includes the following features:

(a) the animal's current motor output (represented as its efference copy);

(b) the animal's current goal (represented as a stored memory of the motor pattern or sensory stimulus that the animal associates with the attainment of the goal); and

(c) the animal's pathway to its current goal (represented as a stored memory of the sequence of motor movements or sensory stimuli which enable the animal to steer itself towards its goal);

(x) the ability to store and compare internal representations of its current motor output (i.e. its efference copy, which represents its current "position" on its internal map) and its afferent sensory inputs;

(xi) a correlation mechanism, allowing it to find a temporal coincidence between its motor behaviour and the attainment of its goal;

(xii) self-correction, that is:

(a) an ability to rectify any deviations in motor output from the range which is appropriate for attaining the goal;

(b) abandonment of behaviour that increases, and continuation of behaviour that reduces, the animal's "distance" (or deviation) from its current goal; and

(c) an ability to form new associations and alter its internal representations (i.e. update its minimal map) in line with variations in surrounding circumstances that are relevant to the animal's attainment of its goal.

Definition - "operant agency"

If the above conditions are all met, then we can legitimately speak of the animal as an intentional agent which believes that it will get what it wants, by doing what its internal map tells it to do.

Animals that are capable of undergoing operant conditioning can thus be said to exhibit a form of agency called operant agency.

Operant conditioning is a form of learning which presupposes an agent-centred intentional stance.
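To make the interplay of these conditions more concrete, here is a minimal sketch in Python. It is a toy, not the author's model or any laboratory's: for simplicity it assumes that heat depends directly on the sign of the fly's own yaw torque. The torque repertoire, heat rule, learning rate and tie-breaking rule are all hypothetical, and the comments map parts of the code only roughly onto the numbered conditions.

```python
"""Toy model of operant agency (hypothetical; not the author's or any lab's model).

Heat is switched on whenever the fly's yaw torque falls on the punished side.
The agent must discover, through its own motor output, which commands avoid it.
"""

# (ii) innate motor programs: a small, hypothetical repertoire of yaw torques
TORQUES = (-3, -2, -1, 1, 2, 3)


def heat_on(torque, punished_side):
    """Environment: is the heat on for this torque? (hypothetical contingency)"""
    return torque < 0 if punished_side == "left" else torque > 0


class OperantAgent:
    def __init__(self, start_torque=-2, rate=0.5):
        # (viii) stored associations between motor commands and their consequences.
        # Starting every estimate at 0.0 makes untried commands look as good as
        # any known cool one, which drives (iii) exploration.
        self.expected_heat = {t: 0.0 for t in TORQUES}
        # (ix a) efference copy: a record of the current motor output
        self.efference_copy = start_torque
        self.rate = rate

    def select(self):
        # (iv) action selection and (v) fine-tuning: prefer the command with the
        # lowest expected heat; among equals, make the smallest change from the
        # current command (so a cool command is simply maintained).
        return min(TORQUES, key=lambda t: (self.expected_heat[t],
                                           abs(t - self.efference_copy)))

    def step(self, punished_side):
        command = self.select()
        self.efference_copy = command
        # (vii) sensory feedback about the current goal (vi): avoiding the heat
        heat = 1.0 if heat_on(command, punished_side) else 0.0
        # (xi) correlate the efference copy with the outcome, and
        # (xii) self-correct by updating the stored association
        self.expected_heat[command] += self.rate * (heat - self.expected_heat[command])
        return command, heat


def run(agent, punished_side, steps=10):
    """Return the (command, heat) pairs produced during one training phase."""
    return [agent.step(punished_side) for _ in range(steps)]


fly = OperantAgent()
print(run(fly, "left")[-1])   # (1, 0.0): settles on a right-turning torque
print(run(fly, "right")[-1])  # (-1, 0.0): after reversal, settles on the left
```

The expectations left over from the first phase make the agent retry the newly punished side for a few steps before it settles: a crude analogue of the point that stored means-end associations must be revised when circumstances change.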

Carruthers' arguments against inferring mental states from conditioning

We can now respond to Carruthers' (2004) sceptical argument against the possibility of attributing minds to animals, solely on the basis of what they have learned through conditioning:

...engaging in a suite of innately coded action patterns isn't enough to count as having a mind, even if the detailed performance of those patterns is guided by perceptual information. And nor, surely, is the situation any different if the action patterns aren't innate ones, but are, rather, acquired habits, learned through some form of conditioning.

Carruthers argues that the presence of a mind is determined by the animal's cognitive architecture - in particular, whether it has beliefs and desires. I agree with Carruthers that neither the existence of flexible patterns of behaviour in an animal nor its ability to undergo conditioning warrants our ascription of mental states to it (Conclusion F.5 and Conclusion L.14). However, we should not assume that there is a single core cognitive architecture underlying all minds. The cognitive architecture presupposed by operant conditioning may turn out to be quite different from that supporting other simple forms of mentalistic behaviour (which I discuss below).

Finally, I have already argued that the internal representations used by animals in operant conditioning constitute bona fide beliefs, because they: (i) track the truth, insofar as they correct their own mistakes; (ii) possess map-like features, as typical beliefs do (which is why I call them minimal maps); and (iii) incorporate both means and ends, making intentional agency possible.

Varner's arguments against inferring mental states from conditioning

Varner (1998) regards association as too mechanical a process to indicate the presence of mental states. He maintains that animals that are genuinely learning should be able to form primitive hypotheses about changes in their environment. I would contend that Varner has set the bar too high here. Forming a hypothesis is a more sophisticated cognitive task than forming a belief, as: (i) it demands a certain degree of creativity, insofar as it seeks to explain the facts; (ii) for any hypothesis, there are alternatives that are also consistent with the facts.

Varner (1998) proposes (following Bitterman, 1965) that reversal tests offer a good way to test animals' abilities to generate hypotheses. I discuss this idea in an addendum to the Appendix. I will simply mention here that at least one species of insect - the honeybee - passes Varner's test with flying colours.

The role of belief and desire in operant conditioning

I have argued above that the existence of map-like representations, which underlie the two-way interaction between the animal's self-generated motor output and its sensory inputs during operant conditioning, requires us to adopt an agent-centred intentional stance. By definition, this presupposes the occurrence of beliefs and desires. In the case of operant conditioning, the content of the agent's beliefs is that by following the pathway, it will attain its goal. The goal is the object of its desire.

In the operant conditioning experiments performed on Drosophila, we can say that the fly desires to attain its goal. The content of the fly's belief is that it will attain its goal by adjusting its motor output and/or steering towards the sensory stimulus it associates with the goal. For instance, the fly desires to avoid the heat, and believes that by staying in a certain zone, it can do so.

The scientific advantage of a mentalistic account of operant conditioning

What, it may be asked, is the scientific advantage of explaining a fly's behaviour in terms of its beliefs and desires? If we think of the animal as an individual, probing its environment and fine-tuning its movements to attain its ends, we can formulate and answer questions such as:

What kinds of means can it encode as pathways to its ends?

Why is it slower to acquire beliefs in some situations than in others?

How does it go about revising its beliefs, as circumstances change?

Criticising the belief-desire account of agency

The belief-desire account of agency is not without its critics. Bittner (2001) has recently argued that neither belief nor desire can explain why we act. A belief may convince me that something is true, but then how can it also steer me into action? A desire can set a goal for me, but this by itself cannot move me to take action. (And if it did, surely it would also steer me.) Even the combination of belief and desire does not constitute a reason for action. Bittner does not deny that we act for reasons, which he envisages as historical explanations, but he denies that internal states can serve as reasons for action.

While I sympathise with Bittner's objections, I do not think they are fatal to an agent-centred intentional stance. The notion of an internal map answers Bittner's argument that belief cannot convince me and steer me at the same time. As I fine-tune my bodily movements in pursuit of my object, the sensory feedback I receive from my probing actions shapes my beliefs (strengthening my conviction that I am on the right track) and at the same time steers me towards my object.

A striking feature of my account is that it makes control prior to the acquisition of belief: the agent manages to control its own body movements, and in so doing, acquires the belief that moving in a particular way will get it what it wants.

Nevertheless, Bittner does have a valid point: the impulse to act cannot come from belief. In the account of agency proposed above, the existence of innate goals, basic motor patterns, exploratory behaviour and an action selection mechanism - all of which can be explained in terms of a goal-centred intentional stance - were simply assumed. This suggests that operant agency is built upon a scaffolding of innate preferences, behaviours and motor patterns. These are what initially move us towards our object.

Bittner's argument against the efficacy of desire fails to distinguish between desire for the end (which is typically an innate drive, and may be automatically triggered whenever the end is sensed) and desire for the means to it (which presupposes the existence of certain beliefs about how to achieve the end). The former not only includes the goal (or end), but also moves the animal, through innate drives. In a similar vein, Aristotle characterised locomotion as "movement started by the object of desire" (De Anima 3.10, 433a16). However, desire of the latter kind presupposes the occurrence of certain beliefs in the animal. An object X, when sensed, may give rise to X-seeking behaviour in an organism with a drive to pursue X. This account does not exclude desire, for there is no reason why an innate preference for X cannot also be a desire for X, if it is accompanied by an internal map. Desire, then, may move an animal. However, the existence of an internal map can only be recognised when an animal has to fine-tune its motor patterns to attain its goal (X) - in other words, when the attainment of the goal is not straightforward.

Is my account falsifiable?

Although few if any philosophers today would insist on falsifiability as a condition of propositional meaningfulness, most scientists remain leery of hypotheses that are unfalsifiable. Fortunately, the account of operant conditioning which I have put forward is easily falsifiable, not only as regards its scope (e.g. are fruit flies capable of undergoing operant conditioning, as I suggest?) but also on a theoretical level.

The proposal that fruit flies are capable of undergoing operant conditioning would be refuted if a simpler mechanism (e.g. instrumental conditioning) were shown to be able to account for their observed behaviour in flight simulator experiments.

Likewise, the theoretical basis of my account of operant conditioning would be severely weakened by the discovery that fruit flies rely on a single-stage process (not a two-stage process, as I suggested above) to figure out how to avoid the heat-beam in "fs-mode", or by evidence that there is no hard-and-fast distinction, at the neurological level, between instrumental and operant conditioning in animals undergoing conditioning.

Does operant conditioning require a first-person account?

It is not my intention to discuss the question of consciousness in fruit flies in this chapter. Here, I simply assume that they are not phenomenologically conscious. Does this mean that a third-person intentional stance is adequate to account for their behaviour?

In part A, I cautioned against equating "first-person" with "phenomenologically conscious", on the grounds that my conscious experiences constitute only a limited subset of all the things which I describe as happening to me.

Despite our attribution of agency, belief and desires even to lowly animals such as insects, the fact that we have characterised the difference between animals with and without beliefs in terms of internal map-like representations may suggest that a third-person account is still adequate to explain operant conditioning. Representations, after all, seem to be the sort of things that can be described from the outside.

Such an interpretation is mistaken, because it overlooks the dynamic features of the map-like representations which feature in operant beliefs. To begin with, there is a continual two-way interplay between the animal's motor output and its sensory input. Because the animal maintains an "efference copy" of its motor program, it is able to make an "inside-outside" comparison. That is, it can compare the sensory inputs arising from motor movements that are controlled from within, with the sensory feedback it gets from its external environment. To do this, it has to carefully monitor, or pay attention to, its sensory inputs. It has to be able to detect both matches (correlations between its movements and the attainment of its goal) and mismatches or deviations - first, in order to approach its goal, and second, in order to keep track of it. The notions of "control" and "attention" employed here highlight the vital importance of the "inside-outside" distinction. In operant conditioning, the animal gets what it wants precisely because it can differentiate between internal and external events, monitor both, and use the former to manipulate the latter.
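The "inside-outside" comparison can be illustrated with what is often called the reafference principle. The sketch below assumes a hypothetical linear forward model in which a self-generated turn of w degrees per second is expected to produce image motion of -w degrees per second. Subtracting that prediction from the sensed image motion leaves the externally caused remainder. The gain and the numbers are illustrative, not measured values for Drosophila.

```python
def predicted_reafference(efference_copy, gain=1.0):
    """Image motion (deg/s) the animal expects from its own turning command.

    Hypothetical linear forward model: turning at +w deg/s should make the
    surroundings appear to move at -gain * w deg/s.
    """
    return -gain * efference_copy


def split_input(efference_copy, sensed_image_motion, gain=1.0):
    """Split sensed image motion into a self-caused and an externally caused part."""
    reafference = predicted_reafference(efference_copy, gain)
    exafference = sensed_image_motion - reafference
    return reafference, exafference


# Turning at +20 deg/s in a stationary arena: all image motion is self-caused.
print(split_input(20.0, -20.0))  # (-20.0, 0.0)
# Same turn, but something external also moves the image by +5 deg/s.
print(split_input(20.0, -15.0))  # (-20.0, 5.0)
```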

In short: to attempt to characterise operant conditioning entirely from the outside is to ignore how it works. Some sort of (low-level) first-person account is required here.

In instrumental conditioning, the situation is much simpler: the animal obtains its goal simply by associating an event which happens to be internal (a general motor pattern in its repertoire) with an external event. No controlled fine-tuning is required. Here, what explains the animal's achieving its goal is not the fact that its motor pattern is internal, but rather the fact that the animal can detect temporal correlations between the motor behaviour and the response.

The current state of research into spatial learning in insects remains fluid. What is not disputed is that some insects (especially social insects, such as ants, bees and wasps) possess a highly sophisticated system of navigation, and that they use at least two mechanisms to find their way back to their nests: path integration (also known as dead reckoning) and memories of visual landmarks. A third mechanism - global (or allocentric) maps - has been proposed for honey bees, but its status remains controversial.

Path integration (dead reckoning)

The best studied mechanism is path integration, which allows insects to navigate on bare terrain, in the absence of visual landmarks. Collett and Collett (2002, p. 546) describe it as follows:

When a honeybee or desert ant leaves its nest, it continually monitors its path, using a sun and polarized light compass to assess its direction of travel, and a measure of retinal image motion (bees) or motor output (ants) to estimate the distance that it covers. This information is used to perform path integration, updating an accumulator that keep a record of the insect's net distance and direction from the nest.

The insect's working memory of its "global" position - its distance and direction from the nest - is continually updated as it moves. An insect's global vector allows it to return home in a straight line from any point in its path. Tests have shown that if a desert ant returning to its nest is moved to unfamiliar terrain, it continues on the same course for a distance roughly equal to the distance it was from the nest. Finding no nest, it then starts a spiral search for the nest. If obstacles are placed in its path, the ant goes around them and adjusts its course appropriately (Collett and Collett, 2002, p. 546; Gallistel, 1998, p. 24; Corry, 2003). When ants have to navigate around obstacles, they memorise the sequence of motor movements corresponding to movement around the obstacle, thereby cutting their information processing costs (Schatz, Chameron, Beugnon and Collett, 1999).
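The core arithmetic of path integration can be sketched as vector summation. This minimal sketch assumes an idealised integrator with exact directions and distances, which real insects do not have; the leg values are hypothetical.

```python
import math


def net_displacement(legs):
    """Sum outbound legs, given as (direction in degrees, distance in metres),
    into a net (x, y) displacement from the nest. x points east, y north,
    and directions are measured anticlockwise from east."""
    x = y = 0.0
    for direction_deg, distance in legs:
        theta = math.radians(direction_deg)
        x += distance * math.cos(theta)
        y += distance * math.sin(theta)
    return x, y


def home_vector(legs):
    """Direction (degrees) and length of the straight path back to the nest."""
    x, y = net_displacement(legs)
    direction = math.degrees(math.atan2(-y, -x)) % 360
    return direction, math.hypot(x, y)


# Outbound: 30 m east (0 degrees), then 40 m north (90 degrees).
direction, distance = home_vector([(0, 30), (90, 40)])
print(round(direction, 1), round(distance, 1))  # 233.1 50.0
```

Because only the running sum is stored, an ant moved to unfamiliar terrain will still run off this vector from wherever it is released, as in the displacement experiments described above.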

Landmark navigation

The other way by which insects navigate is the use of landmarks which they observe en route and at their home base. Naive foragers initially rely on path integration to find their way home, but when they repeat their journey, they learn the appearance of new landmarks and associate local vectors with them, which indicate the distance to the next landmark. With experience, these local vectors take precedence over the global vectors used in dead reckoning.

[T]he basic structure of each route segment is a landmark and an associated local vector or some other stereotyped movement (for example, turning left)... [T]he primary role of a landmark is to serve as a signpost that tells the insect what to do next, rather than as a positional marker... (Collett and Collett, 2002, p. 547).

A landmark navigation system can also work hand-in-hand with path integration: for instance, the Mediterranean ant uses the latter as a filter to screen out unhelpful landmarks, learning only those that can guide subsequent return trips to the nest - particularly those that are close to the nest (Schatz, Chameron, Beugnon and Collett, 1999). Visual landmarks help keep path vector navigation calibrated, as short local vectors are more precise than a route-long global vector, while the learning of visual landmarks is guided by path integration (Collett and Collett, 2002).
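The signpost idea, and the way learned local vectors take precedence over the global vector, can be sketched as a lookup with a fallback. The landmark names and vectors are hypothetical, and real insects recognise views rather than labelled landmarks.

```python
# Hypothetical learned route memory: recognised landmark view -> local vector
# (direction in degrees, distance in metres) telling the insect what to do next.
ROUTE_MEMORY = {
    "bush": (45.0, 12.0),
    "rock": (120.0, 8.0),
    "pebble_pile": (90.0, 3.0),
}


def next_move(current_view, global_vector):
    """Follow the local vector tied to a recognised landmark; if nothing familiar
    is in view (e.g. on a naive first trip), fall back on path integration."""
    return ROUTE_MEMORY.get(current_view, global_vector)


print(next_move("rock", (233.1, 50.0)))        # (120.0, 8.0)
print(next_move("unfamiliar", (233.1, 50.0)))  # (233.1, 50.0)
```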

It is still not known how insects encode landmarks, and what features of the image are stored in their memory. However, it is agreed that ants, bees and wasps not only memorise landmarks, but guide their final approach to their goal by matching their visual image of the landmark with their stored image (snapshot?) of how it looks from the goal. It appears that when comparing their current view to a stored image, they use the retinal positions of the edges, spots of light, the image's centre of gravity and colour. They also learn the appearance of an object from more than one distance, as their path home is divided into separate segments, each guided by a separate view of the object. Additionally, consistency of view is guaranteed because the insect, following the sun or some other cue, always faces the object in the same direction. Finally, the insect's view of the distant panorama from a landmark can help to identify it (Collett and Collett, 2002).
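Matching a current view against a stored snapshot can be illustrated in one dimension. This sketch assumes that the only stored feature is the angular size of a single landmark as seen from the goal, and that the insect moves towards the landmark when the image looks too small and away from it when the image looks too large. Real snapshot matching uses several image features (edges, centre of gravity, colour); the step size, tolerance and landmark width here are hypothetical.

```python
import math


def angular_size(width, distance):
    """Angle (degrees) subtended on the retina by a landmark of the given width."""
    return math.degrees(2 * math.atan(width / (2 * distance)))


def approach_goal(start_distance, goal_distance, width=1.0, step=0.1,
                  tolerance=0.5, max_steps=1000):
    """Move along the line to the landmark until the current image matches the snapshot."""
    snapshot = angular_size(width, goal_distance)  # image stored at the goal
    distance = start_distance
    for _ in range(max_steps):
        mismatch = angular_size(width, distance) - snapshot
        if abs(mismatch) < tolerance:
            break
        # Image too large: back away. Image too small: move closer.
        distance += step if mismatch > 0 else -step
    return distance


print(round(approach_goal(start_distance=10.0, goal_distance=2.0), 1))  # 2.0
```

The step and tolerance have to be matched: if the tolerance were much smaller than the change in image size produced by one step, the simulated insect would oscillate around the goal until `max_steps` ran out.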

Can we describe the navigation systems of insects as representational? If so, is it a map-like representation, like the one I proposed for operant conditioning, and does it qualify an insect to be a belief-holder?

Is path integration (dead reckoning) representational?

Gallistel (1998) regards the nervous system of an insect as a computational system and argues that the ability of insects to perform dead reckoning is strong evidence that their brains are symbol processors, which can represent their movements. The ability of insects' nervous systems to perform computations using the azimuth position of the sun and the pattern of polarised light in the sky, although impressive, does not entail that they have mental states (Conclusion C.3) - as we have seen, even viruses can compute.

More importantly, Gallistel contends that because there is a functional isomorphism between the symbols and rules in an insect's nervous system and events in the outside world, we can call it a representation. The nervous system contains vector symbols: neural signals which represent the insect's net displacement from the nest, and are continually updated as the insect moves about. The current sum of the displacements made by the insect while moving represents its present position and at the same time tells it how to get home.

As Gallistel puts it:

The challenge posed by these findings to those who deny symbol processing capacity in [insect] brains is to come up with a process that looks like dead reckoning but really is not (1998, p. 24).

Insects' ability to hold a course using a sun-compass and to determine distance from parallax are other examples of insect representation cited by Gallistel.

It was argued earlier that a bona fide representation should contain within itself the possibility of mis-representation (Conclusion R.1). The case of the displaced ant, which sets a path that would normally return it to its nest, seems to meet this criterion. Likewise, the honeybee has a built-in visual odometer that estimates distance travelled by integrating image motion over time. The odometer misreads distances if the bee collects food in a short, narrow tunnel (Esch, Zhang, Srinivasan and Tautz, 2001).
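Why a narrow tunnel fools an optic-flow odometer can be shown with a short calculation. This sketch assumes that the image angular velocity produced by a lateral surface is roughly speed divided by the distance to that surface, and that the bee converts integrated image motion into distance using a calibration implicitly set by its usual, more distant surroundings. The calibration distance, tunnel width and flight values are hypothetical, not figures from Esch, Zhang, Srinivasan and Tautz (2001).

```python
def odometer_reading(speeds, dt, wall_distance, calibration_distance):
    """Estimate distance flown from integrated optic flow.

    speeds: forward speed (m/s) sampled every dt seconds.
    wall_distance: actual distance (m) to the surfaces generating image motion.
    calibration_distance: the surface distance (m) the odometer implicitly
    assumes (a hypothetical calibration constant).
    """
    integrated_flow = sum(v / wall_distance * dt for v in speeds)  # radians of image motion
    return integrated_flow * calibration_distance


flight = [1.0] * 6  # 6 s at 1 m/s: 6 m actually flown (hypothetical)
print(round(odometer_reading(flight, 1.0, wall_distance=3.0, calibration_distance=3.0), 1))   # 6.0
print(round(odometer_reading(flight, 1.0, wall_distance=0.06, calibration_distance=3.0), 1))  # 300.0
```

In this toy calculation the same 6 m flight is read correctly in open surroundings but overestimated fifty-fold in the tunnel: the odometer can misrepresent, which is the property Conclusion R.1 requires.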

Corry (2003) points out that isomorphism is not a sufficient condition for a representation: the ability of system A to track system B does not mean that A represents B. However, he proposes that if an individual (e.g. an ant) uses a system (e.g. its internal home vector system) which is functionally isomorphic with the real world, in order to interact with the real world, then the system can be said to represent the real world. This condition is satisfied in the case of dead reckoning by an ant. Can we call such a representation a belief?

Ramsey proposed that a "belief of the primary sort is a map of neighbouring space by which we steer" (1990, p. 146). However, if we look at the path integration system alone, the representation fails to meet even the minimal conditions which I suggested in the section above for a minimal map, as neither the goal nor the current position is represented: only the directional displacement from the goal is encoded. Without an internal representation of one's goal, we cannot speak of agency, or of control. A naive foraging insect, relying solely on path integration, is merely following its internal compass, which is continually updated by events beyond its control.

The same remarks apply to the ability of monarch butterflies to navigate from as far north as Massachusetts to central Mexico, in autumn. Scientists have recently discovered that the monarch uses an in-built sun-compass and a biological clock to find its way (Mouritsen and Frost, 2002). Once again, the butterflies appear to lack any internal representation of their goal (a place with warm, tropical weather where they can spend the winter, as they do not hibernate) or their destination (Mexico). Thus we cannot describe them as agents when they migrate.

Can landmarks serve as maps to steer by?

Path integration on its own works perfectly well even for an untrained insect: it does not require the insect to associate a motor pattern or sensory stimulus with attaining its goal. Navigation by landmarks, on the other hand, requires extensive learning. The location and features (colour, size, edge orientation and centre of gravity) of each landmark have to be memorised. Multiple views of each landmark have to be stored in the insect's brain. Additionally, some insects use panoramic cues to recognise local landmarks. Finally, a local vector has to be associated with each landmark (Collett and Collett, 1998, 2002). The fact that insects are capable of learning new goals and new patterns of means-end behaviour means that they satisfy a necessary condition for the ascription of mental states (Conclusion L.12) - though by itself not a sufficient one, as we have seen that associative learning can take place in the absence of mental states (Conclusion L.14).

While navigating by landmarks, insects such as ants and bees can learn to associate their final goal (the nest) with views of nearby landmarks, which guide them home, even when their final goal is out of sight. These insects often follow "a fixed route that they divide into segments, using prominent objects as sub-goals" (Collett and Collett, 2002, p. 547).

It is a matter of controversy whether insects possess a global (or allocentric) map of their terrain, which combines multiple views and movements in a common frame of reference (Giurfa and Menzel, 2003; Collett and Collett, 2002; Harrison and Schunn, 2003; Giurfa and Capaldi, 1999; Gould, 1986, 2002). A more complete discussion is contained within the Appendix. However, even if an insect has "only a piecemeal and fragmented spatial memory of its environment" (as suggested by Collett and Collett, 2002, p. 549), it clearly meets the requirements for a minimal map. Its current position is represented by the way its nervous system encodes its view of the external world (either as a visual snapshot or as a set of parameters), its short-term goal is the landmark it is heading for, and the path is its local vector, which the insect recalls when the landmark comes into view (Collett and Collett, 2002, pp. 546, 547, 549). The map-like representation employed here is a sensory map, which uses the visual modality.

What are the goals of navigating insects?

For a navigating insect, its long-term goals are food, warmth and the safety of the nest, all of which trigger innate responses. Short-term goals (e.g. landmarks) are desired insofar as they are associated with a long-term goal. Even long-term goals change over time, as new food sources supplant old ones.

Additionally, insects have to integrate multiple goals, relating to the different needs of their community (Seeley, 1995; Hawes, 1995). In other words, they require an action selection mechanism. For example, a bee hive requires a reliable supply of pollen, nectar, and water. Worker field bees can assess which commodity seems to be in short supply within the hive, and search for it.
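An action selection rule of this kind can be sketched as choosing the commodity with the largest relative shortfall. The text does not say how bees assess shortage, so the stores, targets and the rule itself are hypothetical; the point is only that one output is selected from several competing goals.

```python
def choose_commodity(stores, targets):
    """Pick the commodity whose store falls furthest below its target,
    measured as a fraction of that target.

    stores, targets: hypothetical amounts per commodity (arbitrary units).
    """
    def shortfall(commodity):
        return (targets[commodity] - stores.get(commodity, 0.0)) / targets[commodity]

    return max(targets, key=shortfall)


hive_stores = {"pollen": 8.0, "nectar": 3.0, "water": 9.0}
hive_targets = {"pollen": 10.0, "nectar": 10.0, "water": 10.0}
print(choose_commodity(hive_stores, hive_targets))  # nectar
```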

Are navigating insects agents?

The statement by Collett and Collett that "the primary role of a landmark is to serve as a signpost that tells an insect what to do next" (2002, p. 547) recalls Ramsey's claim that a belief is a map by which we steer. Should we then attribute agency, beliefs and desires to an insect navigating by visual landmarks?

Corry (2003) thinks not, since the insect is not consciously manipulating the symbols that encode its way home. It does not calculate where to go; its nervous system does. Corry has a point: representations are not mentalistic per se. As we have seen, the autonomic nervous system represents, but we do not say it has a mind of its own. Nevertheless, Corry's "consciousness" requirement is unhelpful. He is proposing that a physical event E (an insect following a home vector) warrants being interpreted as a mental event F (the insect is trying to find its way home) only if E is accompanied by another mental event G (the insect must be consciously performing the calculations), but he fails to stipulate the conditions that have to be satisfied in order for G to be met.

I would suggest that Ramsey's steering metaphor can help us resolve the question of whether insects navigate mindfully. The word "steer", when used as a transitive verb, means "to control the course of" (Merriam-Webster Online, 2004). Thus steering oneself suggests control of one's bodily movements - a mindful activity, on the account I am developing in this chapter. The autonomic nervous system, as its name suggests, works perfectly well without us having to control it by fine-tuning. It is presumably mindless. On the other hand, Drosophila at the torque meter needed to control its motor movements in order to stabilise the arena and escape the heat. Which side of the divide does insect navigation fall on?

Earlier, I proposed that an animal is controlling its movements if it can compare the efference copy of its motor output with its incoming sensory inputs, and make suitable adjustments. This behaviour merits the description of "agency" because the fine-tuned adjustment is self-generated: it originates from within the animal's nervous system, instead of being triggered from without. This is an internal, neurophysiological measure of control, and its occurrence could easily be confirmed empirically for navigating insects.
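The comparison described above can be sketched as a toy control loop in Python. Everything here is an illustrative assumption, not a model of a real nervous system: a one-dimensional "heading", a constant external drift the agent cannot sense directly, and a forward model which simply predicts that a command produces an equal sensory change. The agent subtracts its efference copy from the sensed change, attributes the remainder to the outside world, and adjusts its own commands accordingly.

```python
def simulate(steps: int = 50, drift: float = 0.3, rate: float = 0.5,
             compare: bool = True) -> tuple[float, float]:
    """Toy heading-stabilisation loop (all parameters hypothetical).

    heading: deviation from the desired course (0 = on course).
    drift:   an external disturbance the agent cannot observe directly.
    Returns the final heading and the agent's estimate of the drift.
    """
    heading = 2.0          # start 2 units off course
    drift_estimate = 0.0   # internal estimate of the externally caused change
    for _ in range(steps):
        # Self-generated command: steer back on course and cancel estimated drift.
        command = -rate * heading - drift_estimate
        efference_copy = command          # internal copy of the outgoing command
        sensed_change = command + drift   # change the sensory system registers
        heading += sensed_change
        if compare:
            # Mismatch = the part of the sensed change the agent did not cause itself.
            mismatch = sensed_change - efference_copy
            drift_estimate += rate * (mismatch - drift_estimate)
    return heading, drift_estimate
```

With `compare=True`, the heading returns to course because the agent learns to cancel the drift. With `compare=False`, the same steering rule settles about 0.6 units off course (drift divided by rate), since nothing tells the agent which part of the change it did not cause itself.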

In the meantime, one could use external criteria for the existence of control: self-correcting patterns of movement. The continual self-monitoring behaviour of navigating insects suggests that they are indeed in control of their bodily movements. For instance, wood ants subdivide their path towards a landmark into a sequence of segments, each guided by a different view of the same object (Collett and Collett, 2002, p. 543). Von Frisch observed that honeybees tend to head for isolated trees along their route, even if it takes them off course (Collett and Collett, 2002, p. 547). Insects also correct for changes in their environmental cues.

Two facts may be urged against the idea that a navigating insect is exercising control over its movements, when it steers itself towards its goal. First, insects appear to follow fixed routines when selecting landmarks to serve as their sub-goals (Collett and Collett, 2002, p. 543). Second, cues in an insect's environment (e.g. the panorama it is viewing) may determine what it remembers when pursuing its goal (e.g. which "snapshot" it recalls - see Collett and Collett, 2002, p. 545). In fact, it turns out that no two insects' maps are the same. Each insect's map is the combined outcome of:

(i) its exploratory behaviour, as it forages for food;

(ii) its ability to learn about its environment;

(iii) the position and types of objects in its path;

(iv) the insect's innate response to these objects; and

(v) certain fundamental constraints on the kinds of objects that can serve as landmarks (Collett and Collett, 2002, pp. 543, 548-549).

As each insect has its own learning history and foraging behaviour, we cannot say that an insect's environment determines its map.

We argued above that control requires explanation in terms of an agent-centred intentional stance, as a goal-centred intentional stance is incapable of encoding the two-way interplay between the agent adjusting its motor output and the new sensory information it is receiving from its environment, which allows it to correct itself. In a goal-centred stance, the animal's goal-seeking behaviour is triggered by a one-way process: the animal receives information from its environment.

Finally, we cannot speak of agency unless there is trying on the part of the insect. The existence of exploratory behaviour, coupled with self-correcting patterns of movement, allows us to speak of the insect as trying to find food. Studies have also shown that when the landmarks that mark an insect's feeding site are moved, insects try to find the place where their view of the landmarks matches the view they see from their goal.
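The homing behaviour just described, in the spirit of the "snapshot" matching mentioned earlier, can be sketched as trial-and-error descent on a view mismatch. The following assumptions are for illustration only: the stored snapshot is just the compass bearings of the landmarks as seen from the goal, and the simulated insect tries small steps in eight directions, keeps the one that most reduces the mismatch, and stops when none does. The landmark layout and all function names are hypothetical.

```python
import math


def bearings(pos, landmarks):
    """Compass bearing (radians) from pos to each landmark."""
    return [math.atan2(ly - pos[1], lx - pos[0]) for lx, ly in landmarks]


def angle_diff(a, b):
    """Smallest signed difference between two angles, in [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def mismatch(pos, landmarks, snapshot):
    """Sum of squared bearing errors between the current view and the snapshot."""
    return sum(angle_diff(a, b) ** 2
               for a, b in zip(bearings(pos, landmarks), snapshot))


def home_in(start, landmarks, snapshot, step=0.05, max_steps=2000):
    """Trial-and-error homing: keep moves that reduce the mismatch."""
    directions = [(math.cos(k * math.pi / 4), math.sin(k * math.pi / 4))
                  for k in range(8)]
    pos = start
    for _ in range(max_steps):
        candidates = [(pos[0] + step * dx, pos[1] + step * dy)
                      for dx, dy in directions]
        best = min(candidates, key=lambda p: mismatch(p, landmarks, snapshot))
        if mismatch(best, landmarks, snapshot) >= mismatch(pos, landmarks, snapshot):
            break  # every trial move makes the view worse: stop here
        pos = best
    return pos


# Usage: store a snapshot at a hypothetical goal, then home in from nearby.
landmarks = [(0.0, 5.0), (5.0, 0.0), (-4.0, -4.0)]
goal = (1.0, 1.0)
snapshot = bearings(goal, landmarks)
end = home_in((2.0, 1.0), landmarks, snapshot)
```

Starting from (2, 1), the search stops close to the goal at (1, 1). The rule of continuing moves that reduce the mismatch and abandoning those that increase it mirrors, in a much simplified form, the self-correction condition in the model that follows.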

A model of agency in navigating insects

We can now set out the sufficient conditions for the existence of agency in navigating insects:

(i) innate preferences or drives;

(ii) innate motor programs, which are stored in the brain, and generate the suite of the animal's motor output;

(iii) a tendency on the animal's part to engage in exploratory behaviour, in order to locate food sites;

(iv) an action selection mechanism, which allows the animal to make a selection from its suite of possible motor response patterns and pick the one that is the most appropriate to its current circumstances;

(v) fine-tuning behaviour: efferent motor commands which are capable of steering the animal in a particular direction - i.e. towards food or towards a visual landmark that may help it locate food;

(vi) a current goal (long-term goal): the attainment of a "reward" (usually a distant food source);

EXTRA CONDITION:

(vi*) sub-goals (short-term goals), such as landmarks, which the animal uses to steer itself towards its goal;

(vii) visual sensory inputs that inform the animal about its current position, in relation to its long-term goal, and enable it to correct its movements if the need arises;

(viii) direct or indirect associations (a) between visual landmarks and local vectors; and (b) between the animal's short-term goals (landmarks) and long-term goals (food sites or the nest). These associations are stored in the animal's memory and updated when circumstances change;

(ix) an internal representation (minimal map) which includes the following features:

(a) the animal's current motor output (represented as its efference copy);

(b) the animal's current goal (represented as a stored memory of a visual stimulus that the animal associates with the attainment of the goal) and sub-goals (represented as stored memories of visual landmarks); and

(c) the animal's pathway to its current goal, via its sub-goals (represented as a stored memory of the sequence of visual landmarks which enable the animal to steer itself towards its goal, as well as a sequence of vectors that help the animal to steer itself from one landmark to the next);

(x) the ability to store and compare internal representations of its current motor output (i.e. its efference copy, which represents its current "position" on its internal map) and its afferent sensory inputs. Motor output and sensory inputs are linked by a two-way interaction;

[NOT NEEDED:

(xi) a correlation mechanism, allowing it to find a temporal coincidence between its motor behaviour and the attainment of its goal;]

(xii) self-correction, that is:

(a) an ability to rectify any deviations (or mismatches) between its view and its internally stored image of its goal or sub-goal, first in order to approach its goal or sub-goal, and second in order to keep track of it;

(b) abandonment of behaviour that increases, and continuation of behaviour that reduces, the animal's "distance" (or deviation) from its current goal; and

(c) an ability to form new associations and alter its internal representations (i.e. update its minimal map) in line with variations in surrounding circumstances that are relevant to the animal's attainment of its goal.

Definition - "navigational agency"

We are justified in ascribing agency to a navigating animal if all of the conditions listed above are met. Such an animal is said to exhibit navigational agency: it qualifies as an intentional agent which believes that it will get what it wants by doing what its internal map tells it to do.

What do navigational agents believe and desire?

It has been argued that an animal's map-like means-end representation, when formed by a process under the agent's control, deserves to be called a belief, as it encodes the animal's current status, its goal and the means of attaining it. A navigating insect believes that it will attain its (short- or long-term) goal by adjusting its motor output and/or steering towards the sensory stimulus it associates with the goal.

Carruthers (2004) offers an additional pragmatic argument in favour of ascribing beliefs and desires to animals with mental maps:

If the animal can put together a variety of goals with the representations on a mental map, say, and act accordingly, then why shouldn't we say that the animal believes as it does because it wants something and believes that the desired thing can be found at a certain represented location on the map?

While I would endorse much of what Carruthers has to say, I should point out that his belief-desire architecture is somewhat different from mine. He proposes that perceptual states inform belief states, which interact with desire states, to select from an array of action schemata, which determine the form of the motor behaviour. I propose that the initial selection from the array of action schemata is not mediated by beliefs, which only emerge when the animal fine-tunes its selected action schema in an effort to obtain what it wants. Moreover, I propose a two-way interaction between motor output and sensory input. Finally, the mental maps discussed by Carruthers are spatial ones, whereas I also allow for motor or sensorimotor maps.

Does navigational agency require a first-person intentional stance?

An insect's navigational behaviour, like its operant behaviour, cannot be described purely from the outside, by a third-person intentional stance. The continual two-way interplay between the animal's motor output (efference copy), which is controlled from within its body, and its sensory feedback from its external environment, enables it to make an "inside-outside" comparison by paying attention to its sensory inputs. The notions of "control" and "attention" make sense only if we retain the "inside-outside" distinction. A first-person intentional stance is therefore required, even if the insect lacks consciousness.

What is a cephalopod?

Cephalopods are a class of the phylum Mollusca (molluscs), and are therefore related to bivalves (scallops, oysters and clams), snails and slugs, tusk shells and chitons. Cephalopods include the pelagic, shelled nautiloids and the coleoids (cuttlefish, squid and octopods, the group to which octopuses - not octopi - belong). Among molluscs, cephalopods are renowned for their large brains, while other molluscs (e.g. bivalves) lack even a head, let alone a proper brain.

An overview of the cephalopods, their relationships to other animals and their cognitive abilities can be found in the Appendix.

Fine tuning in octopuses

Most cephalopods have very flexible limbs, with virtually unlimited degrees of freedom. Scientists have recently discovered that octopuses control the movement of their limbs by using a decentralised system, where most of the fine-tuning occurs in the limb itself:

...[A]n octopus moves its arms simply by sending a "move" command from its brain to its arm and telling it how far to move. The arm does the rest, controlling its own movement as it extends.

"There appears to be an underlying motor program... which does not require continuous central control," the researchers write (Noble, 2001).

"[E]ach arm is controlled by an elaborate nervous system consisting of around 50 million neurons" (Noble, 2001).

The following discussion focuses principally on the well-studied common octopus, Octopus vulgaris.

A model of agency involving tool use

The conditions for agency in tool use are similar to those for operant agency, except that there is no need for a temporal correlation mechanism, and the activity required to attain one's goal is performed with an external object (a tool), and not just one's body.

Beck (1980) proposed that in order to qualify as a tool user, an animal must be able to modify, carry or manipulate an item external to itself, before using it to effect some change in the environment (Mather and Anderson, 1998). Beck's definition of a tool is commonly cited in the literature, so I shall use it here. At the same time, I would like to note that Beck's yardstick, taken by itself, cannot tell us whether the tool-using behaviour it describes is fixed or flexible.

We can now formulate a set of sufficient conditions for agency in tool use:

NEW CONDITION: a tool - that is, an item external to the animal, which it modifies, carries or manipulates, before using it to effect some change in the environment (Beck, 1980);

(i) innate preferences or drives;

(ii) innate motor programs, which are stored in the brain, and generate the suite of the animal's motor output;

(iii) a tendency on the animal's part to engage in exploratory behaviour, by using its tools to probe its environment;

(iv) an action selection mechanism, which allows the animal to make a selection from its suite of possible motor response patterns and pick the one that is the most appropriate for the tool it is using and the object it is using the tool to obtain;

(v) fine-tuning behaviour: an ability to stabilise one of its motor patterns within a narrow range of values, to enable the animal to achieve its goal by using the tool;

(vi) a current goal: the acquisition of something useful or beneficial to the individual;

(vii) sensory inputs that inform the animal whether it has attained its goal with its tool, and if not, whether it is getting closer to achieving it;

(viii) associations between different tool-using motor commands and their consequences, which are stored in the animal's memory;

(ix) an internal representation (minimal map) which includes the following features: